How do Large Language Models do Maths?

We think of LLMs as language models that write words very well in reply to natural-language prompts, so how is it that they can do maths?

Background

We can think of Large Language Models (LLMs) as having been trained on essentially all the text on the Internet, which is, in effect, memorised in their model parameters. The more parameters a model has, the more accurately it can recall from this memory.

Frontier models that a few years ago used 8 billion parameters now use hundreds of billions, or reportedly even 2 to 3 trillion (GPT-4.5 and Grok 3), although the vendors have not published exact figures.

The ability to predict the best next token to continue a word or sentence is key to language models. Remember that a token is not a single letter but a chunk of text, which may be quite long, whose boundaries are set by a tokenizer learned from the training data. Each token is represented by a numeric token ID (see Tiktokenizer).
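To make tokens concrete, here is a minimal Python sketch of the first step of tokenization: splitting text into pieces. It assumes a simplified pattern loosely modelled on the way OpenAI's cl100k_base tokenizer groups digits into runs of at most three. Real tokenizers such as `tiktoken` then apply learned merges and a fixed vocabulary, so `pre_tokenize`, `to_ids` and the toy vocabulary here are hypothetical.

```python
import re

# Simplified pre-tokenization pattern (an assumption, not the real tokenizer):
# digit runs of at most 3, letter runs, whitespace, or a single other symbol.
PATTERN = re.compile(r"\d{1,3}|[A-Za-z]+|\s+|[^\sA-Za-z\d]")


def pre_tokenize(text: str) -> list[str]:
    """Split text into the pieces a tokenizer would then map to IDs."""
    return PATTERN.findall(text)


def to_ids(pieces: list[str]) -> list[int]:
    """Hypothetical vocabulary: each new piece gets the next integer ID."""
    vocab: dict[str, int] = {}
    return [vocab.setdefault(piece, len(vocab)) for piece in pieces]


pieces = pre_tokenize("1256*1478")
print(pieces)          # ['125', '6', '*', '147', '8']
print(to_ids(pieces))  # [0, 1, 2, 3, 4]
```

Under this assumption the model never sees 1256 and 1478 as two whole numbers, only as the pieces 125, 6, *, 147 and 8, which is one plausible reason long arithmetic is awkward for a next-token predictor.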

So what does this mean when we ask an LLM to do simple mathematics?

Memorisation of tables

Let’s use LM Arena’s Direct Chat tab and pick an older model, Mistral-Large-2407, which was released in July 2024 and has 123 billion parameters.

(For LLMs, “old” is measured in months, not years: the latest Mistral models are already up to version 2502.)

Then we ask it to do a simple multiplication, in this case 6*12.

We can see that it immediately returns the correct answer of 72.

In the same way that we all memorised multiplication tables at primary school, by repeatedly reading and committing to memory that 6*12=72, LLMs will also have seen this text many times on the Internet and have consequently memorised that the answer is 72.
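As an analogy only (an LLM does not store a literal lookup table; what it learns is spread across its parameters), memorised tables behave like a dictionary of known facts. A minimal Python sketch, with a hypothetical `recall` function:

```python
# Toy model of "memorised" multiplication tables: every product up to 12 x 12
# is stored as a fact to be recalled, not calculated.
TIMES_TABLE = {(a, b): a * b for a in range(1, 13) for b in range(1, 13)}


def recall(a: int, b: int):
    """Return the memorised product, or None if it was never learned."""
    return TIMES_TABLE.get((a, b))


print(recall(6, 12))   # 72, straight from memory
print(recall(12, 14))  # None: not in the table, so it must be worked out
```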

What if we now ask for a multiplication that we would not have memorised?

Let’s try 12*14, on the assumption that we all memorised multiplication tables up to 12*12 and not beyond.

Presented with this, I would split it into (12*10) + (12*4) in my head, which I know are 120 + 48, and then mentally sum them to get 168.
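The mental-maths split above is the distributive law, 12*14 = 12*(10 + 4) = 12*10 + 12*4. A small Python sketch of the same approach, assuming the second number is a non-negative integer; `multiply_by_parts` is a hypothetical name:

```python
def multiply_by_parts(a: int, b: int) -> int:
    """Multiply a by b by splitting b into tens and units, as in mental maths."""
    tens, units = divmod(b, 10)    # 14 -> (1, 4)
    partial_tens = a * tens * 10   # for 12 * 14: 12 * 10 = 120
    partial_units = a * units      # for 12 * 14: 12 * 4 = 48
    return partial_tens + partial_units


print(multiply_by_parts(12, 14))  # 168
```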

What does our LLM do?

It seems to have memorised tables beyond 12, as it returns 168.

Memory is not perfect

As we know, trying to remember too much results in lossy memory, i.e. we can recall most, but not all, of something. LLMs behave similarly.

So, given a multiplication that they will not have seen on the Internet, or have seen so infrequently that their memory of it is not perfect, they may try to guess the answer.

Let’s try 1256*1478=?

Interestingly, Mistral breaks the calculation down into steps and then confidently states the answer as 1,855,688.

However, using a calculator, I can see that the correct answer is 1,856,368.

Looking at the step-by-step calculation, the steps themselves are correct; however, the result of 1256*8 is wrong, as is the result of 1256*4 (shifted two places), and the four numbers it shows would actually sum to 1,755,632!
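For comparison, here are the correct partial products for 1256*1478 as written long multiplication produces them. This is a minimal Python sketch (the `long_multiplication` helper is hypothetical and assumes non-negative integers); it is a calculator-style check of the steps, not a reproduction of Mistral’s output.

```python
def long_multiplication(a: int, b: int) -> list[int]:
    """Return the partial products of a * b, one per digit of b (units first),
    each already shifted by its place value, as in written long multiplication."""
    partials = []
    for place, digit in enumerate(reversed(str(b))):
        partials.append(a * int(digit) * 10 ** place)
    return partials


partials = long_multiplication(1256, 1478)
print(partials)       # [10048, 87920, 502400, 1256000]
print(sum(partials))  # 1856368
```

Checking each step of a model’s working against these four values is a quick way to spot which partial products it misremembered.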

So there is clearly a lot of imperfect, lossy memory at work here.

So we have to be careful giving maths problems to LLMs, or do we?

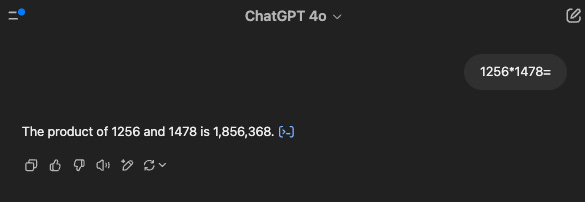

Let’s double check with GPT-4o-2024-11-20.

Oh dear, close but no cigar!

The LLM confidently asserts the answer as 1,856,968 (from memory) when it should be 1,856,368: out by a single digit in the hundreds place, or 600.
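A quick way to confirm where two answers diverge is to compare them digit by digit. A minimal Python sketch, with a hypothetical helper that only handles numbers of equal length, up to seven digits:

```python
PLACE_NAMES = ["units", "tens", "hundreds", "thousands",
               "ten thousands", "hundred thousands", "millions"]


def differing_places(answer: int, expected: int) -> list[str]:
    """Name the place values at which two integers of the same length differ."""
    a, e = str(answer)[::-1], str(expected)[::-1]  # units digit first
    if len(a) != len(e) or len(e) > len(PLACE_NAMES):
        raise ValueError("expects two numbers of equal length, up to 7 digits")
    return [PLACE_NAMES[i] for i in range(len(e)) if a[i] != e[i]]


print(differing_places(1_856_968, 1_856_368))  # ['hundreds']
print(1_856_968 - 1_856_368)                   # 600
```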

Note: I pointed this out to the LLM, and after it offered to show its steps, I told it which step was wrong; it eventually returned the correct result.

Tool Use

However, before you despair, all is not lost.

If I instead go to the ChatGPT web page or mobile app, select 4o as the model and enter the same prompt, I get the correct answer of 1,856,368.

How is this possible?

Clicking the blue brackets next to the answer shows how ChatGPT arrived at the result.

Aha.

ChatGPT worked out that the way to answer this was not from memory but with Python code: it converted the prompt into code, which it then ran to get the result.
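The exact code ChatGPT generated is visible only in that panel, so the following is a hypothetical Python example of the kind of code such a step might run for this prompt:

```python
# Hypothetical example of code a model might generate for "1256*1478=?".
# Python integers are exact, so nothing here is recalled from memory.
a = 1256
b = 1478
result = a * b
print(f"{a} * {b} = {result:,}")  # 1256 * 1478 = 1,856,368
```

Because Python integers have arbitrary precision, the product is computed exactly rather than recalled, which is why the tool-using answer is right.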

This is much the same way we would turn to a calculator or Excel and enter the numbers, rather than try to work it out from memory and mental maths.

The use of tools such as calculators, code and Internet search is a key capability of LLMs.
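To illustrate the idea of tool use, here is a toy Python sketch of a router that sends plain arithmetic to a calculator tool and everything else to the model. This is not how any vendor implements tool calling (real models decide for themselves, during generation, when to call a tool); the `answer` and `calculator` functions and the matching rule are hypothetical assumptions.

```python
import ast
import operator
import re

# Binary operators the toy calculator tool is allowed to apply.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}

# Hypothetical rule: a prompt is "maths" if it is only numbers, operators,
# brackets and an optional trailing "=?", e.g. "1256*1478=?".
_ARITHMETIC = re.compile(r"^\s*([\d(][\d\s+\-*/().]*?)\s*(?:=\s*\?)?\s*$")


def calculator(expression: str):
    """Evaluate a plain arithmetic expression by walking its syntax tree (no eval())."""

    def _eval(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -_eval(node.operand)
        raise ValueError("unsupported expression")

    return _eval(ast.parse(expression, mode="eval").body)


def answer(prompt: str) -> str:
    """Route arithmetic to the calculator tool; everything else to the model."""
    match = _ARITHMETIC.match(prompt)
    if match:
        return f"{calculator(match.group(1)):,}"
    # Placeholder for the hypothetical language-model path.
    return "(answered by the language model)"


print(answer("1256*1478=?"))  # 1,856,368
print(answer("What is the capital of France?"))
```

The calculator walks the expression’s syntax tree with Python’s `ast` module instead of calling `eval()`, so only numbers and the four basic operators are accepted.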

The apps you are likely to use from the main vendors also recognise mathematics and give correct answers.

I verified this in ChatGPT, Claude and Gemini.

Complex Maths

Asking Google “how LLMs solve complex maths problems” returns an AI Overview on the topic, along with further explanations covering key points and limitations, which I will leave you to try for yourself in Search.

That’s all for today.

Learnings

LLM memory is similar to ours, just far larger in reach and depth.

When presented with simple arithmetic, LLMs can answer from this memory.

Just as we humans do, having memorised our multiplication tables.

However, this can quickly go wrong with larger calculations.

So LLMs can recognise maths in prompts and switch to using tools, such as computer code or symbolic solvers.

This means they can do a lot of maths, scoring above 80% on hard maths problems.

We should be cognisant of how LLMs work, so that we know how to use them safely.